How to Read HTML Source Code in Python

Tutorial: Web Scraping with Python Using Beautiful Soup

Published: March 30, 2021

Learn how to scrape the web with Python!

The internet is an absolutely massive source of data, and we can access that data using web scraping and Python!

In fact, web scraping is often the only way we can access data. There is a lot of information out there that isn't available in convenient CSV exports or easy-to-connect APIs. And websites themselves are often valuable sources of data. Consider, for example, the kinds of analysis you could do if you could download every post on a web forum.

To access those sorts of on-page datasets, we'll have to use web scraping.

Don't worry if you're still a full beginner!

In this tutorial we're going to cover how to do web scraping with Python from scratch, starting with some answers to frequently asked questions.

Then, we'll work through an actual web scraping project, focusing on weather data.

We'll work together to scrape weather data from the web to support a weather app.

But before we start writing any Python, we've got to cover the basics! If you're already familiar with the concept of web scraping, feel free to scroll past these questions and jump right into the tutorial!

The Fundamentals of Web Scraping:

What is Spider web Scraping in Python?

Some websites offer data sets that are downloadable in CSV format, or attainable via an Application Programming Interface (API). But many websites with useful information don't offer these convenient options.

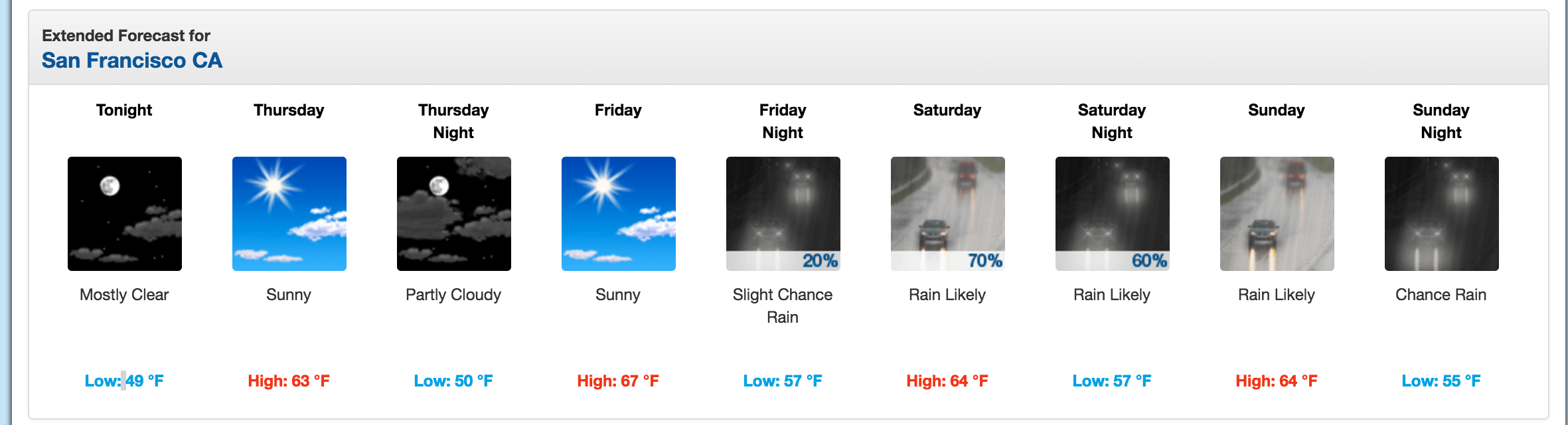

Consider, for example, the National Weather Service's website. It contains up-to-date weather forecasts for every location in the The states, but that weather condition information isn't accessible as a CSV or via API. It has to be viewed on the NWS site:

If we wanted to analyze this data, or download it for apply in some other app, we wouldn't want to painstakingly copy-paste everything. Web scraping is a technique that lets us use programming to exercise the heavy lifting. We'll write some code that looks at the NWS site, grabs just the data we want to work with, and outputs it in the format we need.

In this tutorial, we'll show yous how to perform spider web scraping using Python 3 and the Beautiful Soup library. We'll be scraping weather condition forecasts from the National Weather Service, and so analyzing them using the Pandas library.

But to exist clear, lots of programming languages can be used to scrape the web! We too teach spider web scraping in R, for example. For this tutorial, though, we'll be sticking with Python.

How Does Web Scraping Work?

When we scrape the web, we write code that sends a request to the server that's hosting the page we specified. The server will return the source code (HTML, mostly) for the page (or pages) we requested.

So far, we're essentially doing the same thing a web browser does: sending a server request with a specific URL and asking the server to return the code for that page.

But unlike a web browser, our web scraping code won't interpret the page's source code and display the page visually. Instead, we'll write some custom code that filters through the page's source code looking for specific elements we've specified, and extracting whatever content we've instructed it to extract.

For example, if we wanted to get all of the data from inside a table that was displayed on a web page, our code would be written to go through these steps in sequence:

- Request the content (source code) of a specific URL from the server

- Download the content that is returned

- Identify the elements of the page that are part of the table we want

- Extract and (if necessary) reformat those elements into a dataset we can analyze or use in whatever way we require.
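As a rough sketch of those four steps, the example below uses Beautiful Soup (covered in detail later in this tutorial). To keep it runnable offline, an inline string stands in for the downloaded page. The table contents and the names `SAMPLE_HTML` and `extract_table` are made up for illustration and are not part of the original tutorial.

```python
from bs4 import BeautifulSoup

# Steps 1-2 (request + download) are simulated: in a real scraper this
# string would come from something like requests.get(url).content.
SAMPLE_HTML = """
<html><body>
  <table id="scores">
    <tr><th>Team</th><th>Points</th></tr>
    <tr><td>Red</td><td>3</td></tr>
    <tr><td>Blue</td><td>5</td></tr>
  </table>
</body></html>
"""


def extract_table(html, table_id):
    """Return the rows of the table with the given id as lists of cell text."""
    soup = BeautifulSoup(html, "html.parser")
    # Step 3: identify the elements that belong to the table we want.
    table = soup.find("table", id=table_id)
    rows = []
    for tr in table.find_all("tr"):
        # Step 4: extract each cell's text and strip surrounding whitespace.
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        rows.append(cells)
    return rows


rows = extract_table(SAMPLE_HTML, "scores")
print(rows)
```

The result is a plain list of lists, which is easy to hand to a CSV writer or a DataFrame later on.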

If that all sounds very complicated, don't worry! Python and Beautiful Soup have built-in features designed to make this relatively straightforward.

One thing that's important to note: from a server's perspective, requesting a page via web scraping is the same as loading it in a web browser. When we use code to submit these requests, we might be "loading" pages much faster than a regular user, and thus quickly eating up the website owner's server resources.

Why Use Python for Web Scraping?

As previously mentioned, it's possible to do web scraping with many programming languages.

However, one of the most popular approaches is to use Python and the Beautiful Soup library, as we'll do in this tutorial.

Learning to do this with Python will mean that there are lots of tutorials, how-to videos, and bits of example code out there to help you deepen your knowledge once you've mastered the Beautiful Soup basics.

Is Web Scraping Legal?

Unfortunately, there's not a cut-and-dried answer here. Some websites explicitly allow web scraping. Others explicitly forbid it. Many websites don't offer any clear guidance one way or the other.

Before scraping any website, we should look for a terms and conditions page to see if there are explicit rules about scraping. If there are, we should follow them. If there are not, then it becomes more of a judgement call.

Remember, though, that web scraping consumes server resources for the host website. If we're just scraping one page once, that isn't going to cause a problem. But if our code is scraping 1,000 pages once every ten minutes, that could quickly get expensive for the website owner.

Thus, in addition to following any and all explicit rules about web scraping posted on the site, it's also a good idea to follow these best practices:

Web Scraping Best Practices:

- Never scrape more frequently than you need to.

- Consider caching the content you scrape so that it's only downloaded once.

- Build pauses into your code using functions like time.sleep() to keep from overwhelming servers with too many requests too quickly.
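The caching and pausing advice can be sketched as a small wrapper around whatever function actually downloads a page. The names `make_polite_fetcher` and `fake_fetch` are hypothetical, and the one-second default delay is an assumption rather than a recommendation from any particular site. A fake downloader is used here so the example runs without network access.

```python
import time


def make_polite_fetcher(fetch, delay_seconds=1.0, sleep=time.sleep):
    """Wrap a fetch(url) callable with a cache and a pause between downloads.

    `fetch` is whatever actually downloads a page (for example a small
    function around requests.get); it is passed in so the wrapper itself
    stays independent of any HTTP library.
    """
    cache = {}

    def polite_fetch(url):
        # Serve repeat requests from the cache so each URL is downloaded once.
        if url in cache:
            return cache[url]
        # Pause before every real download except the very first one.
        if cache:
            sleep(delay_seconds)
        cache[url] = fetch(url)
        return cache[url]

    return polite_fetch


# Demonstration with a fake downloader, so no network access is needed.
downloads = []


def fake_fetch(url):
    downloads.append(url)
    return f"<html>{url}</html>"


fetch_page = make_polite_fetcher(fake_fetch, delay_seconds=0.01)
fetch_page("https://example.com/a")
fetch_page("https://example.com/a")  # served from the cache
fetch_page("https://example.com/b")  # short pause, then a real download
print(downloads)
```

Only two downloads are recorded for three calls, because the repeated URL never reaches the downloader a second time.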

In our case for this tutorial, the NWS's data is public domain and its terms do not forbid web scraping, so we're in the clear to proceed.


The Components of a Web Page

Before we start writing code, we need to understand a little bit about the structure of a web page. We'll use the site's structure to write code that gets us the data we want to scrape, so understanding that structure is an important first step for any web scraping project.

When we visit a web page, our web browser makes a request to a web server. This request is called a GET request, since we're getting files from the server. The server then sends back files that tell our browser how to render the page for us. These files will typically include:

- HTML: the main content of the page.

- CSS: used to add styling to make the page look nicer.

- JS: JavaScript files add interactivity to web pages.

- Images: image formats, such as JPG and PNG, allow web pages to show pictures.

After our browser receives all the files, it renders the page and displays it to us.

There's a lot that happens behind the scenes to render a page nicely, but we don't need to worry about most of it when we're web scraping. When we perform web scraping, we're interested in the main content of the web page, so we look primarily at the HTML.

HTML

HyperText Markup Language (HTML) is the language that web pages are created in. HTML isn't a programming language, like Python, though. It's a markup language that tells a browser how to display content.

HTML has many functions that are similar to what you might find in a word processor like Microsoft Word: it can make text bold, create paragraphs, and so on.

If you're already familiar with HTML, feel free to jump to the next section of this tutorial. Otherwise, let's take a quick tour through HTML so we know enough to scrape effectively.

HTML consists of elements called tags. The most basic tag is the <html> tag. This tag tells the web browser that everything inside of it is HTML. We can make a simple HTML document just using this tag:

    <html>
    </html>

We haven't added any content to our page yet, so if we viewed our HTML document in a web browser, we wouldn't see anything.

Right inside an html tag, we can put two other tags: the head tag, and the body tag.

The main content of the web page goes into the body tag. The head tag contains data about the title of the page, and other information that generally isn't useful in web scraping:

    <html>
      <head>
      </head>
      <body>
      </body>
    </html>

We still haven't added any content to our page (that goes inside the body tag), so if we open this HTML file in a browser, we still won't see anything.

You may have noticed above that we put the head and body tags inside the html tag. In HTML, tags are nested, and can go inside other tags.

We'll now add our first content to the page, inside a p tag. The p tag defines a paragraph, and any text inside the tag is shown as a separate paragraph:

    <html>
      <head>
      </head>
      <body>
        <p>
          Here's a paragraph of text!
        </p>
        <p>
          Here's a second paragraph of text!
        </p>
      </body>
    </html>

Rendered in a browser, that HTML file will look like this:

Here's a paragraph of text!

Here's a second paragraph of text!

Tags have commonly used names that depend on their position in relation to other tags:

- child: a child is a tag inside another tag. So the two p tags above are both children of the body tag.

- parent: a parent is the tag another tag is inside. Above, the html tag is the parent of the body tag.

- sibling: a sibling is a tag that is nested inside the same parent as another tag. For example, head and body are siblings, since they're both inside html. Both p tags are siblings, since they're both inside body.
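These relationships can be checked in code. The sketch below uses Beautiful Soup, which this tutorial introduces properly further on, to walk the small two-paragraph document from above. The variable names are illustrative only.

```python
from bs4 import BeautifulSoup

# The document is written without line breaks so that no whitespace-only
# text nodes appear between the tags.
html_doc = (
    "<html><head></head><body>"
    "<p>Here's a paragraph of text!</p>"
    "<p>Here's a second paragraph of text!</p>"
    "</body></html>"
)
soup = BeautifulSoup(html_doc, "html.parser")

body = soup.body
first_p = body.find("p")

# Children: the tags directly inside body.
child_names = [child.name for child in body.find_all(recursive=False)]

# Parent: the tag that body sits inside.
parent_name = body.parent.name

# Siblings: tags that share the same parent.
head_sibling = soup.head.find_next_sibling().name
p_sibling_text = first_p.find_next_sibling("p").get_text()

print(child_names, parent_name, head_sibling, p_sibling_text)
```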

We can also add properties to HTML tags that change their behavior. Below, we'll add some extra text and hyperlinks using the a tag.

    <html>
      <head>
      </head>
      <body>
        <p>
          Here's a paragraph of text!
          <a href="https://www.dataquest.io">Learn Data Science Online</a>
        </p>
        <p>
          Here's a second paragraph of text!
          <a href="https://www.python.org">Python</a>
        </p>
      </body>
    </html>

Here's how this will look:

Here's a paragraph of text! Learn Data Science Online

Here's a second paragraph of text! Python

In the above example, we added two a tags. a tags are links, and tell the browser to render a link to another web page. The href property of the tag determines where the link goes.

a and p are extremely common HTML tags. Here are a few others:

- div: indicates a division, or area, of the page.

- b: bolds any text inside.

- i: italicizes any text inside.

- table: creates a table.

- form: creates an input form.

For a full list of tags, look here.

Before we move into actual web scraping, let's learn about the class and id properties. These special properties give HTML elements names, and make them easier to interact with when we're scraping.

One element can have multiple classes, and a class can be shared between elements. Each element can only have one id, and an id can only be used once on a page. Classes and ids are optional, and not all elements will have them.

We can add classes and ids to our example:

    <html>
      <head>
      </head>
      <body>
        <p class="bold-paragraph">
          Here's a paragraph of text!
          <a href="https://www.dataquest.io" id="learn-link">Learn Data Science Online</a>
        </p>
        <p class="bold-paragraph extra-large">
          Here's a second paragraph of text!
          <a href="https://www.python.org" class="extra-large">Python</a>
        </p>
      </body>
    </html>

Here's how this will look:

Here's a paragraph of text! Learn Data Science Online

Here's a second paragraph of text! Python

As you can see, adding classes and ids doesn't change how the tags are rendered at all.
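Although classes and ids don't affect rendering, they are visible to code. As a small sketch (again using Beautiful Soup ahead of its introduction below), here is how the class and id values from a trimmed copy of the example can be read back. The variable names are illustrative.

```python
from bs4 import BeautifulSoup

html_doc = """
<p class="bold-paragraph">
  <a href="https://www.dataquest.io" id="learn-link">Learn Data Science Online</a>
</p>
<p class="bold-paragraph extra-large">
  <a href="https://www.python.org" class="extra-large">Python</a>
</p>
"""
soup = BeautifulSoup(html_doc, "html.parser")

paragraphs = soup.find_all("p")
links = soup.find_all("a")

# class is multi-valued, so Beautiful Soup returns it as a list of names.
second_p_classes = paragraphs[1]["class"]

# id is single-valued; .get() returns None when the attribute is missing.
first_link_id = links[0].get("id")
second_link_id = links[1].get("id")

# A class can be shared: both a p tag and an a tag carry "extra-large".
shared = [tag.name for tag in soup.find_all(class_="extra-large")]

print(second_p_classes, first_link_id, second_link_id, shared)
```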

The requests library

Now that we understand the structure of a web page, it's time to get into the fun part: scraping the content we want!

The first thing we'll need to do to scrape a web page is to download the page. We can download pages using the Python requests library.

The requests library will make a GET request to a web server, which will download the HTML contents of a given web page for us. There are several different types of requests we can make using requests, of which GET is just one. If you want to learn more, check out our API tutorial.

Let's try downloading a simple sample website, https://dataquestio.github.io/web-scraping-pages/simple.html.

We'll need to first import the requests library, and then download the page using the requests.get method:

    import requests

    page = requests.get("https://dataquestio.github.io/web-scraping-pages/simple.html")
    page

    <Response [200]>

After running our request, we get a Response object. This object has a status_code property, which indicates if the page was downloaded successfully:

    page.status_code

    200

A status_code of 200 means that the page downloaded successfully. We won't fully dive into status codes here, but a status code starting with a 2 generally indicates success, and a code starting with a 4 or a 5 indicates an error.
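The leading-digit rule is simple enough to write down as a helper. This is only an illustrative sketch: `describe_status` is a hypothetical name, and the labels it returns are a simplification of the full set of HTTP status classes.

```python
def describe_status(status_code):
    """Classify an HTTP status code by its leading digit."""
    first_digit = status_code // 100
    if first_digit == 2:
        return "success"
    if first_digit == 4:
        return "client error"
    if first_digit == 5:
        return "server error"
    return "other"


for code in (200, 404, 503):
    print(code, describe_status(code))
```

In a real script, the Response object from requests also offers `raise_for_status()`, which raises an exception for 4xx and 5xx responses, so a failed download stops the script instead of being parsed as if it were the page we wanted.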

We can print out the HTML content of the page using the content property:

    page.content

    <!DOCTYPE html>
    <html>
      <head>
        <title>A simple example page</title>
      </head>
      <body>
        <p>Here is some simple content for this page.</p>
      </body>
    </html>

Parsing a page with BeautifulSoup

As you can see above, we now have downloaded an HTML document.

We can use the BeautifulSoup library to parse this document, and extract the text from the p tag.

We first have to import the library, and create an instance of the BeautifulSoup class to parse our document:

    from bs4 import BeautifulSoup

    soup = BeautifulSoup(page.content, 'html.parser')

We can now print out the HTML content of the page, formatted nicely, using the prettify method on the BeautifulSoup object.

    print(soup.prettify())

    <!DOCTYPE html>
    <html>
      <head>
        <title>A simple example page</title>
      </head>
      <body>
        <p>Here is some simple content for this page.</p>
      </body>
    </html>

This step isn't strictly necessary, and we won't always bother with it, but it can be helpful to look at prettified HTML to make the structure of the page and where tags are nested easier to see.

As all the tags are nested, we can move through the structure one level at a time. We can first select all the elements at the top level of the page using the children property of soup.

Note that children returns an iterator rather than a list, so we need to call the list function on it:

    list(soup.children)

    ['html', '\n', <html> <head> <title>A simple example page</title> </head> <body> <p>Here is some simple content for this page.</p> </body> </html>]

The above tells us that there are two tags at the top level of the page: the initial <!DOCTYPE html> tag, and the <html> tag. There is a newline character (\n) in the list as well. Let's see what the type of each element in the list is:

    [type(item) for item in list(soup.children)]

    [bs4.element.Doctype, bs4.element.NavigableString, bs4.element.Tag]

As we can see, all of the items are BeautifulSoup objects:

- The first is a Doctype object, which contains information about the type of the document.

- The second is a NavigableString, which represents text found in the HTML document.

- The final item is a Tag object, which contains other nested tags.

The most important object type, and the one we'll deal with most often, is the Tag object.

The Tag object allows us to navigate through an HTML document, and extract other tags and text. You can learn more about the various BeautifulSoup objects here.
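Because whitespace and the doctype show up as children alongside real tags, it can help to filter them out before navigating by position. The helper below is a sketch of that idea; `child_tags` is a hypothetical name, and the inline document is a copy of the sample page so the example runs without a download.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

html_doc = """<!DOCTYPE html>
<html>
<head><title>A simple example page</title></head>
<body>
<p>Here is some simple content for this page.</p>
</body>
</html>"""
soup = BeautifulSoup(html_doc, "html.parser")


def child_tags(node):
    """Return only the Tag children of node, skipping text and doctype nodes."""
    return [child for child in node.children if isinstance(child, Tag)]


top_level = [tag.name for tag in child_tags(soup)]
html_children = [tag.name for tag in child_tags(soup.html)]
print(top_level, html_children)
```

With the text nodes removed, positions in the list no longer depend on how the page happens to be indented.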

We can now select the html tag and its children by taking the third item in the list:

    html = list(soup.children)[2]

Each item in the list returned by the children property is also a BeautifulSoup object, so we can also call the children method on html.

Now, we can find the children inside the html tag:

    list(html.children)

    ['\n', <head> <title>A simple example page</title> </head>, '\n', <body> <p>Here is some simple content for this page.</p> </body>, '\n']

As we can see above, there are two tags here, head, and body. We want to extract the text inside the p tag, so we'll dive into the body:

    body = list(html.children)[3]

Now, we can get the p tag by finding the children of the body tag:

    list(body.children)

    ['\n', <p>Here is some simple content for this page.</p>, '\n']

We can now isolate the p tag:

    p = list(body.children)[1]

Once we've isolated the tag, we can use the get_text method to extract all of the text inside the tag:

    p.get_text()

    'Here is some simple content for this page.'

Finding all instances of a tag at once

What we did above was useful for figuring out how to navigate a page, but it took a lot of commands to do something fairly simple.

If we want to extract a single tag, we can instead use the find_all method, which will find all the instances of a tag on a page.

    soup = BeautifulSoup(page.content, 'html.parser')
    soup.find_all('p')

    [<p>Here is some simple content for this page.</p>]

Note that find_all returns a list, so we'll have to loop through it, or use list indexing, to extract text:

    soup.find_all('p')[0].get_text()

    'Here is some simple content for this page.'

If you instead only want to find the first instance of a tag, you can use the find method, which will return a single BeautifulSoup object:

    soup.find('p')

    <p>Here is some simple content for this page.</p>

Searching for tags by class and id

We introduced classes and ids earlier, but it probably wasn't clear why they were useful.

Classes and ids are used by CSS to determine which HTML elements to apply certain styles to. But when we're scraping, we can also use them to specify the elements we want to scrape.

To illustrate this principle, we'll work with the following page:

    <html>
      <head>
        <title>A simple example page</title>
      </head>
      <body>
        <div>
          <p class="inner-text first-item" id="first"> First paragraph. </p>
          <p class="inner-text"> Second paragraph. </p>
        </div>
        <p class="outer-text first-item" id="second"> <b> First outer paragraph. </b> </p>
        <p class="outer-text"> <b> Second outer paragraph. </b> </p>
      </body>
    </html>

We can access the above document at the URL https://dataquestio.github.io/web-scraping-pages/ids_and_classes.html.

Let's first download the page and create a BeautifulSoup object:

    page = requests.get("https://dataquestio.github.io/web-scraping-pages/ids_and_classes.html")
    soup = BeautifulSoup(page.content, 'html.parser')
    soup

    <html>
      <head>
        <title>A simple example page</title>
      </head>
      <body>
        <div>
          <p class="inner-text first-item" id="first"> First paragraph. </p>
          <p class="inner-text"> Second paragraph. </p>
        </div>
        <p class="outer-text first-item" id="second"><b> First outer paragraph. </b></p>
        <p class="outer-text"><b> Second outer paragraph. </b></p>
      </body>
    </html>

Now, we can use the find_all method to search for items by class or by id. In the below example, we'll search for any p tag that has the class outer-text:

    soup.find_all('p', class_='outer-text')

    [<p class="outer-text first-item" id="second"> <b> First outer paragraph. </b> </p>, <p class="outer-text"> <b> Second outer paragraph. </b> </p>]

In the below example, we'll look for any tag that has the class outer-text:

    soup.find_all(class_="outer-text")

    [<p class="outer-text first-item" id="second"> <b> First outer paragraph. </b> </p>, <p class="outer-text"> <b> Second outer paragraph. </b> </p>]

We can also search for elements by id:

    soup.find_all(id="first")

    [<p class="inner-text first-item" id="first"> First paragraph. </p>]

Using CSS Selectors

We can also search for items using CSS selectors. These selectors are how the CSS language allows developers to specify HTML tags to style. Here are some examples:

- p a: finds all a tags inside of a p tag.

- body p a: finds all a tags inside of a p tag inside of a body tag.

- html body: finds all body tags inside of an html tag.

- p.outer-text: finds all p tags with a class of outer-text.

- p#first: finds all p tags with an id of first.

- body p.outer-text: finds any p tags with a class of outer-text inside of a body tag.

You can learn more about CSS selectors here.

BeautifulSoup objects support searching a page via CSS selectors using the select method. We can use CSS selectors to find all the p tags in our page that are inside of a div like this:

    soup.select("div p")

    [<p class="inner-text first-item" id="first"> First paragraph. </p>, <p class="inner-text"> Second paragraph. </p>]

Note that the select method above returns a list of BeautifulSoup objects, just like find_all (find, by contrast, returns a single object).
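To compare the two search styles side by side, the sketch below runs find_all and select against an inline copy of the ids-and-classes page, so it works without downloading anything. It also uses select_one, Beautiful Soup's single-result counterpart to select. The variable names are illustrative.

```python
from bs4 import BeautifulSoup

html_doc = """
<html><body>
<div>
  <p class="inner-text first-item" id="first">First paragraph.</p>
  <p class="inner-text">Second paragraph.</p>
</div>
<p class="outer-text first-item" id="second"><b>First outer paragraph.</b></p>
<p class="outer-text"><b>Second outer paragraph.</b></p>
</body></html>
"""
soup = BeautifulSoup(html_doc, "html.parser")

# find_all with class_ and select with a CSS class selector match the same tags.
by_find_all = [p.get_text() for p in soup.find_all("p", class_="outer-text")]
by_select = [p.get_text() for p in soup.select("p.outer-text")]

# select_one returns the first match (or None) instead of a list.
first_by_id = soup.select_one("p#first").get_text()

# A descendant selector: p tags anywhere inside a div.
inside_div = [p.get_text() for p in soup.select("div p")]

print(by_find_all, by_select, first_by_id, inside_div)
```

Which style to use is mostly a matter of taste: find_all reads naturally for a single tag name or class, while selectors are more compact once nesting is involved.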

Downloading weather condition information

We at present know enough to proceed with extracting information almost the local weather from the National Weather Service website!

The first step is to detect the page we want to scrape. We'll extract weather information about downtown San Francisco from this folio.

Specifically, allow's extract data near the extended forecast.

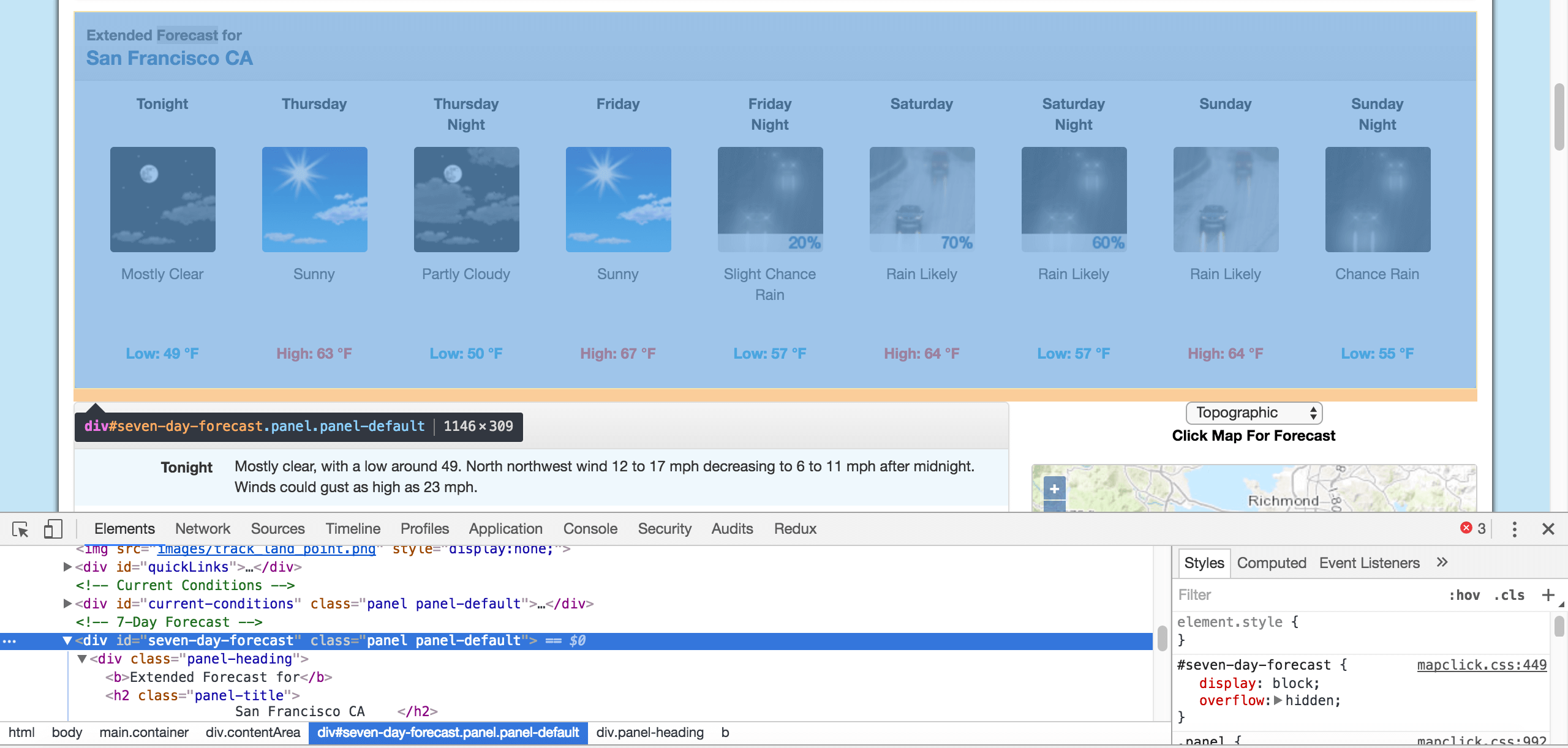

As we can see from the paradigm, the page has information nearly the extended forecast for the next calendar week, including fourth dimension of 24-hour interval, temperature, and a brief description of the weather.

Exploring folio structure with Chrome DevTools

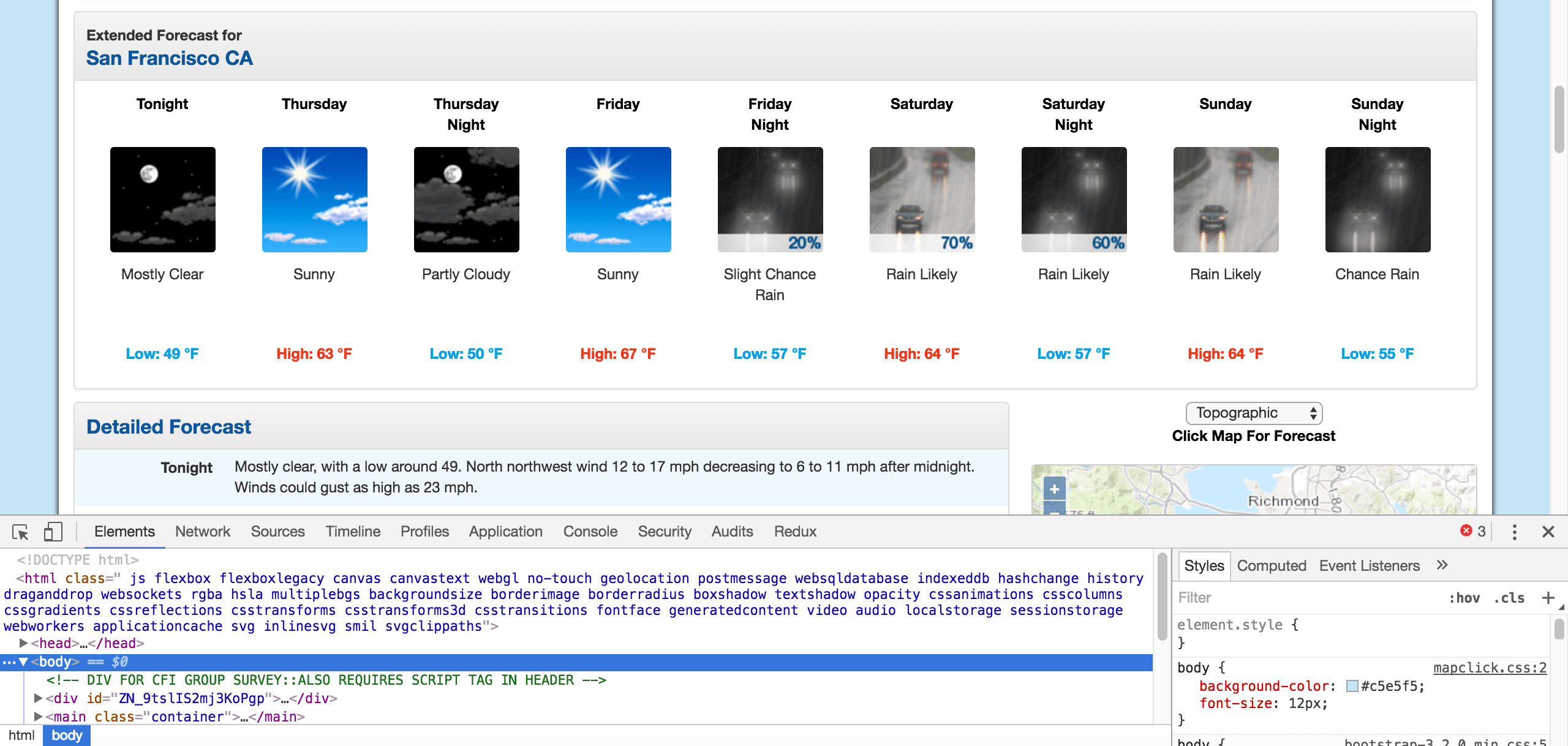

The commencement affair we'll need to do is inspect the page using Chrome Devtools. If you're using another browser, Firefox and Safari take equivalents.

You lot can beginning the developer tools in Chrome by clicking View -> Developer -> Developer Tools. You should end up with a panel at the bottom of the browser like what you see below. Make sure the Elements console is highlighted:

Chrome Programmer Tools

The elements panel will show you all the HTML tags on the page, and let you navigate through them. It's a really handy feature!

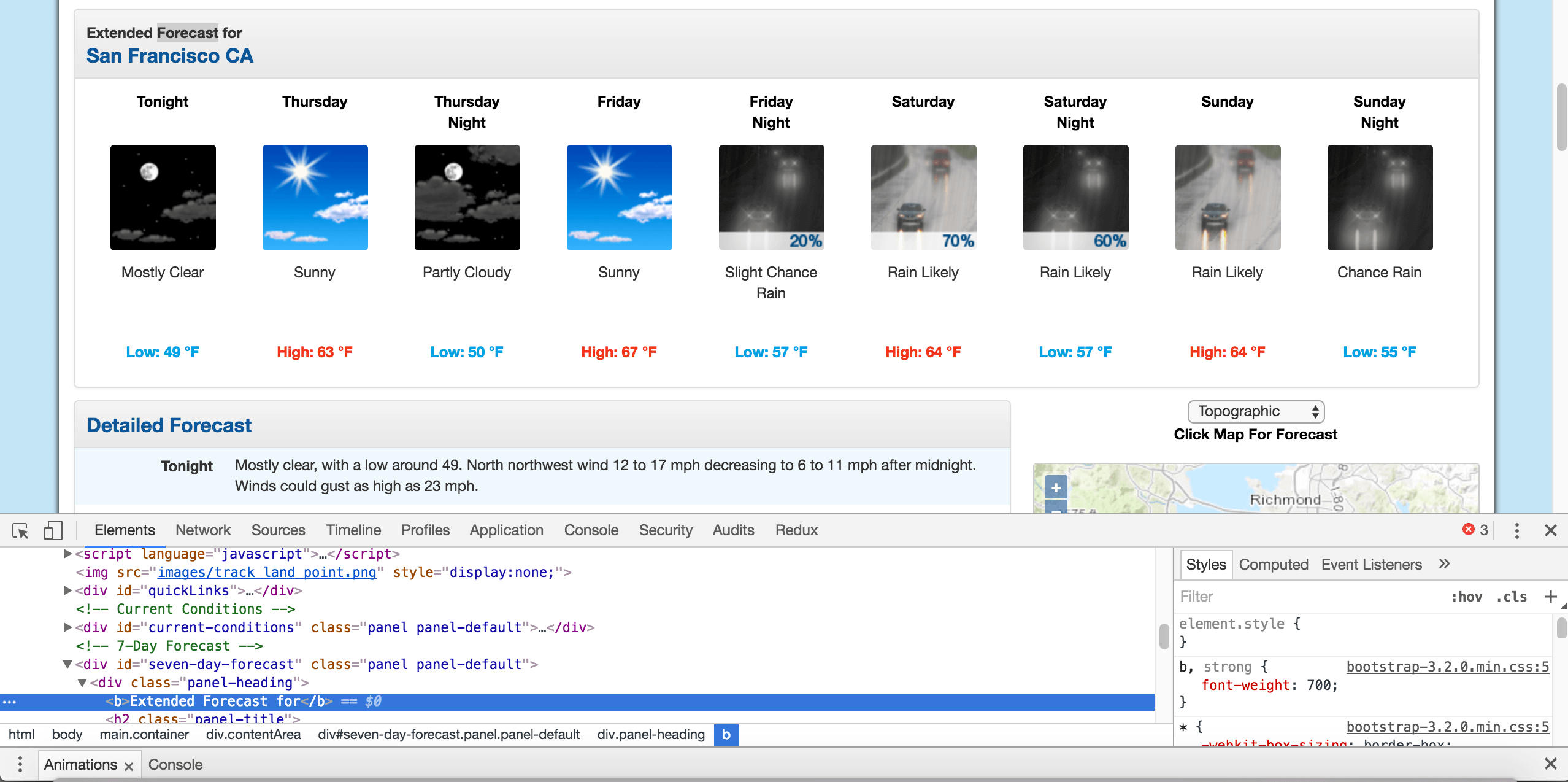

By right clicking on the folio near where it says "Extended Forecast", then clicking "Inspect", we'll open the tag that contains the text "Extended Forecast" in the elements panel:

The extended forecast text

We can then ringlet upwards in the elements console to find the "outermost" element that contains all of the text that corresponds to the extended forecasts. In this case, it's a div tag with the id seven-day-forecast:

The div that contains the extended forecast items.

If nosotros click around on the console, and explore the div, we'll observe that each forecast item (similar "Tonight", "Thursday", and "Thursday Night") is independent in a div with the course tombstone-container.

Time to Start Scraping!

We now know enough to download the page and start parsing it. In the below code, we will:

- Download the web page containing the forecast.

- Create a BeautifulSoup object to parse the page.

- Find the div with id seven-day-forecast, and assign it to seven_day.

- Inside seven_day, find each individual forecast item.

- Extract and print the first forecast item.

    page = requests.get("https://forecast.weather.gov/MapClick.php?lat=37.7772&lon=-122.4168")
    soup = BeautifulSoup(page.content, 'html.parser')
    seven_day = soup.find(id="seven-day-forecast")
    forecast_items = seven_day.find_all(class_="tombstone-container")
    tonight = forecast_items[0]
    print(tonight.prettify())

    <div class="tombstone-container">
     <p class="period-name">
      Tonight
      <br>
       <br/>
      </br>
     </p>
     <p>
      <img alt="Tonight: Mostly clear, with a low around 49. West northwest wind 12 to 17 mph decreasing to 6 to 11 mph after midnight. Winds could gust as high as 23 mph. " class="forecast-icon" src="newimages/medium/nfew.png" title="Tonight: Mostly clear, with a low around 49. West northwest wind 12 to 17 mph decreasing to 6 to 11 mph after midnight. Winds could gust as high as 23 mph. "/>
     </p>
     <p class="short-desc">
      Mostly Clear
     </p>
     <p class="temp temp-low">
      Low: 49 °F
     </p>
    </div>

Extracting information from the page

As we can see, inside the forecast item tonight is all the information we want. There are four pieces of information we can extract:

- The name of the forecast item: in this case, Tonight.

- The description of the conditions: this is stored in the title property of img.

- A short description of the conditions: in this case, Mostly Clear.

- The temperature low: in this case, 49 degrees.

We'll extract the name of the forecast item, the short description, and the temperature first, since they're all similar:

    period = tonight.find(class_="period-name").get_text()
    short_desc = tonight.find(class_="short-desc").get_text()
    temp = tonight.find(class_="temp").get_text()
    print(period)
    print(short_desc)
    print(temp)

    Tonight
    Mostly Clear
    Low: 49 °F

Now, we can extract the title attribute from the img tag. To do this, we just treat the BeautifulSoup object like a dictionary, and pass in the attribute we want as a key:

    img = tonight.find("img")
    desc = img['title']
    print(desc)

    Tonight: Mostly clear, with a low around 49. West northwest wind 12 to 17 mph decreasing to 6 to 11 mph after midnight. Winds could gust as high as 23 mph.

Extracting all the data from the page

Now that we know how to extract each individual piece of information, we can combine our knowledge with CSS selectors and list comprehensions to extract everything at once.

In the below code, we will:

- Select all items with the class period-name inside an item with the class tombstone-container in seven_day.

- Use a list comprehension to call the get_text method on each BeautifulSoup object.

    period_tags = seven_day.select(".tombstone-container .period-name")
    periods = [pt.get_text() for pt in period_tags]
    periods

    ['Tonight', 'Thursday', 'ThursdayNight', 'Friday', 'FridayNight', 'Saturday', 'SaturdayNight', 'Sunday', 'SundayNight']

As we can see above, our technique gets us each of the period names, in order.
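One caveat with collecting each field into its own list is that the lists only line up if every forecast item contains every field. A possible alternative, sketched below, walks the items one at a time and builds one record per item, recording None for anything missing. This is not the tutorial's method, just a variation on it: the inline HTML is a cut-down, partly invented imitation of the NWS markup (the second item deliberately lacks a temperature and an image), and `text_or_none` and `parse_forecast` are hypothetical helper names.

```python
from bs4 import BeautifulSoup

# Stand-in for the downloaded page; only the class names and the id are
# taken from the real markup discussed above.
SAMPLE_FORECAST = """
<div id="seven-day-forecast">
  <div class="tombstone-container">
    <p class="period-name">Tonight</p>
    <p><img class="forecast-icon" title="Tonight: Mostly clear, with a low around 49."/></p>
    <p class="short-desc">Mostly Clear</p>
    <p class="temp temp-low">Low: 49 °F</p>
  </div>
  <div class="tombstone-container">
    <p class="period-name">Thursday</p>
    <p class="short-desc">Sunny</p>
  </div>
</div>
"""


def text_or_none(parent, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    match = parent.select_one(selector)
    return match.get_text(strip=True) if match is not None else None


def parse_forecast(html):
    """Build one dictionary per forecast item so the fields stay aligned."""
    soup = BeautifulSoup(html, "html.parser")
    records = []
    for item in soup.select("#seven-day-forecast .tombstone-container"):
        img = item.find("img")
        records.append({
            "period": text_or_none(item, ".period-name"),
            "short_desc": text_or_none(item, ".short-desc"),
            "temp": text_or_none(item, ".temp"),
            # .get() avoids a KeyError if the title attribute is absent.
            "desc": img.get("title") if img is not None else None,
        })
    return records


records = parse_forecast(SAMPLE_FORECAST)
print(records)
```

A list of dictionaries like this can also be passed straight to pandas.DataFrame, with each key becoming a column.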

We can use the same technique to get the other three fields:

    short_descs = [sd.get_text() for sd in seven_day.select(".tombstone-container .short-desc")]
    temps = [t.get_text() for t in seven_day.select(".tombstone-container .temp")]
    descs = [d["title"] for d in seven_day.select(".tombstone-container img")]
    print(short_descs)
    print(temps)
    print(descs)

    ['Mostly Clear', 'Sunny', 'Mostly Clear', 'Sunny', 'Slight ChanceRain', 'Rain Likely', 'Rain Likely', 'Rain Likely', 'Chance Rain']
    ['Low: 49 °F', 'High: 63 °F', 'Low: 50 °F', 'High: 67 °F', 'Low: 57 °F', 'High: 64 °F', 'Low: 57 °F', 'High: 64 °F', 'Low: 55 °F']
    ['Tonight: Mostly clear, with a low around 49. West northwest wind 12 to 17 mph decreasing to 6 to 11 mph after midnight. Winds could gust as high as 23 mph. ', 'Thursday: Sunny, with a high near 63. North wind 3 to 5 mph. ', 'Thursday Night: Mostly clear, with a low around 50. Light and variable wind becoming east southeast 5 to 8 mph after midnight. ', 'Friday: Sunny, with a high near 67. Southeast wind around 9 mph. ', 'Friday Night: A 20 percent chance of rain after 11pm. Partly cloudy, with a low around 57. South southeast wind 13 to 15 mph, with gusts as high as 20 mph. New precipitation amounts of less than a tenth of an inch possible. ', 'Saturday: Rain likely. Cloudy, with a high near 64. Chance of precipitation is 70%. New precipitation amounts between a quarter and half of an inch possible. ', 'Saturday Night: Rain likely. Cloudy, with a low around 57. Chance of precipitation is 60%.', 'Sunday: Rain likely. Cloudy, with a high near 64.', 'Sunday Night: A chance of rain. Mostly cloudy, with a low around 55.']

Combining our data into a Pandas Dataframe

We can now combine the data into a Pandas DataFrame and analyze it. A DataFrame is an object that can store tabular data, making data analysis easy. If you want to learn more about Pandas, check out our free to start course here.

In order to do this, we'll call the DataFrame class, and pass in each list of items that we have. We pass them in as part of a dictionary.

Each dictionary key will become a column in the DataFrame, and each list will become the values in the column:

    import pandas as pd

    weather = pd.DataFrame({
        "period": periods,
        "short_desc": short_descs,
        "temp": temps,
        "desc": descs
    })
    weather

| | desc | period | short_desc | temp |
|---|---|---|---|---|
| 0 | Tonight: Mostly clear, with a low around 49. West… | Tonight | Mostly Clear | Low: 49 °F |
| 1 | Thursday: Sunny, with a high near 63. North wi… | Thursday | Sunny | High: 63 °F |
| 2 | Thursday Night: Mostly clear, with a low aroun… | ThursdayNight | Mostly Clear | Low: 50 °F |
| 3 | Friday: Sunny, with a high near 67. Southeast … | Friday | Sunny | High: 67 °F |
| 4 | Friday Night: A 20 percent chance of rain afte… | FridayNight | Slight ChanceRain | Low: 57 °F |
| 5 | Saturday: Rain likely. Cloudy, with a high ne… | Saturday | Rain Likely | High: 64 °F |
| 6 | Saturday Night: Rain likely. Cloudy, with a l… | SaturdayNight | Rain Likely | Low: 57 °F |
| 7 | Sunday: Rain likely. Cloudy, with a high near… | Sunday | Rain Likely | High: 64 °F |
| 8 | Sunday Night: A chance of rain. Mostly cloudy… | SundayNight | Chance Rain | Low: 55 °F |

We can now do some analysis on the data. For example, we can use a regular expression and the Series.str.extract method to pull out the numeric temperature values:

    temp_nums = weather["temp"].str.extract(r"(?P<temp_num>\d+)", expand=False)
    weather["temp_num"] = temp_nums.astype('int')
    temp_nums

    0    49
    1    63
    2    50
    3    67
    4    57
    5    64
    6    57
    7    64
    8    55
    Name: temp_num, dtype: object

We could then find the mean of all the high and low temperatures:

    weather["temp_num"].mean()

    58.444444444444443

We could also only select the rows that happen at night:

    is_night = weather["temp"].str.contains("Low")
    weather["is_night"] = is_night
    is_night

    0     True
    1    False
    2     True
    3    False
    4     True
    5    False
    6     True
    7    False
    8     True
    Name: temp, dtype: bool

    weather[is_night]

| | desc | period | short_desc | temp | temp_num | is_night |
|---|---|---|---|---|---|---|
| 0 | Tonight: Mostly clear, with a low around 49. W… | Tonight | Mostly Clear | Low: 49 °F | 49 | True |
| 2 | Thursday Night: Mostly clear, with a low aroun… | ThursdayNight | Mostly Clear | Low: 50 °F | 50 | True |
| 4 | Friday Night: A 20 percent chance of rain afte… | FridayNight | Slight ChanceRain | Low: 57 °F | 57 | True |
| 6 | Saturday Night: Rain likely. Cloudy, with a l… | SaturdayNight | Rain Likely | Low: 57 °F | 57 | True |
| 8 | Sunday Night: A chance of rain. Mostly cloudy… | SundayNight | Chance Rain | Low: 55 °F | 55 | True |
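For anyone who wants to try the analysis without scraping first, here is a self-contained sketch that repeats it on a three-row stand-in for the scraped data. The rows are copied from the table above, and `mean_temp` and `night_periods` are illustrative names.

```python
import pandas as pd

# A three-row stand-in for the scraped forecast data.
weather = pd.DataFrame({
    "period": ["Tonight", "Thursday", "ThursdayNight"],
    "temp": ["Low: 49 °F", "High: 63 °F", "Low: 50 °F"],
})

# The named group temp_num captures the first run of digits in each string.
temp_nums = weather["temp"].str.extract(r"(?P<temp_num>\d+)", expand=False)
weather["temp_num"] = temp_nums.astype("int")

# Rows whose temp string contains "Low" are the night-time forecasts.
is_night = weather["temp"].str.contains("Low")
night_rows = weather[is_night]

mean_temp = weather["temp_num"].mean()
night_periods = night_rows["period"].tolist()
print(mean_temp, night_periods)
```

The raw string prefix (r"...") keeps the backslash in \d from being interpreted by Python before the pattern reaches the regular expression engine.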

Next Steps For This Web Scraping Project

If you've made it this far, congratulations! You should now have a good understanding of how to scrape web pages and extract data. Of course, there's still a lot more to learn!

If you want to go further, a good next step would be to pick a site and try some web scraping on your own. Some good examples of data to scrape are:

- News articles

- Sports scores

- Weather forecasts

- Stock prices

- Online retailer prices

You may also want to keep scraping the National Weather Service, and see what other data you can extract from the page, or about your own city.

Alternatively, if you want to take your web scraping skills to the next level, you can check out our interactive course, which covers both the basics of web scraping and using Python to connect to APIs. With those two skills under your belt, you'll be able to collect lots of unique and interesting datasets from sites all over the web!


Source: https://www.dataquest.io/blog/web-scraping-python-using-beautiful-soup/
